New rules for US national security agencies balance AI’s promise with need to protect against risks

WASHINGTON (AP) — New rules from the White House on the use of artificial intelligence by U.S. national security and spy agencies aim to balance the technology’s immense promise with the need to protect against its risks.

The rules being announced Thursday are designed to ensure that national security agencies can access the latest and most powerful AI while also mitigating its misuse, according to Biden administration officials who briefed reporters on condition of anonymity under ground rules set by the White House.

Recent advances in artificial intelligence have been hailed as potentially transformative for a long list of industries and sectors, including military, national security and intelligence. But there are risks to the technology’s use by government, including possibilities it could be harnessed for mass surveillance, cyberattacks or even lethal autonomous devices.

The new policy framework will prohibit certain uses of AI, such as any applications that would violate constitutionally protected civil rights or any system that would automate the deployment of nuclear weapons.

The rules also are designed to promote responsible use of AI by directing national security and spy agencies to use the most advanced systems that also safeguard American values, the officials said.

Other provisions call for improved security of the nation’s computer chip supply chain and direct intelligence agencies to prioritize work to protect American industry from foreign espionage campaigns.

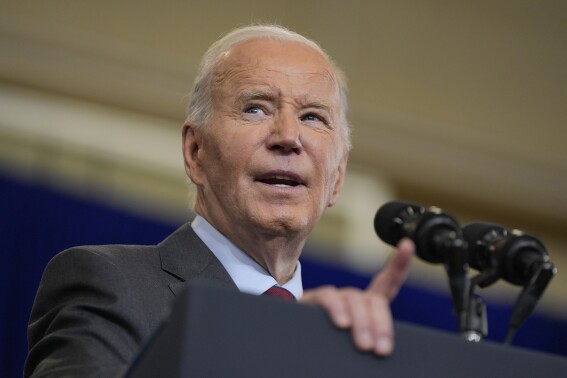

The guidelines were created following an ambitious executive order signed by President Joe Biden last year that directed federal agencies to create policies for how AI could be used.

Officials said the rules are needed not only to ensure that AI is used responsibly but also to encourage the development of new AI systems and see that the U.S. keeps up with China and other rivals also working to harness the technology’s power.